Particle Filter Localization Project

Project Material

- Objectives

- Deliverables

- Deadlines & Submission

- The Particle Filter Localization Description

- Running the Code

- Working with the Map

- A View of the Goal

- Getting Out of the House: Navigation to a Goal Location

- Helpful Tips

- A Personal Note

Objectives

Your goal in this project is to gain in-depth knowledge of and experience with solving the problem of robot localization using the particle filter algorithm. This problem set is designed to give you the opportunity to learn about probabilistic approaches within robotics and to continue to grow your skills in robot programming. As before, if you have any questions about this project or find yourself getting stuck, please post on the course Slack or send a Slack DM to the teaching team. Even if you don't find yourself hitting roadblocks, feel free to share with your peers what's working well for you.

Learning Goals

- Continue gaining experience with ROS and robot programming

- Gain a hands-on learning experience with robot localization, and specifically the particle filter algorithm

- Learn about probabilistic approaches to solving robotics problems

Teaming & Logistics

You are expected to work with 1 other student for this project. If you strongly prefer working by yourself, please reach out to the teaching team to discuss your individual case. A team of 3 will only be allowed if there is an odd number of students. Your team will submit your code and writeup together (in 1 Github repo).

Deliverables

Like last project, you'll submit this project on Github (both the code and the writeup). Have one team member fork our starter git repo to get our starter code and so that we can track your project. All team members will contribute code to this one forked repo.

Implementation Plan

Please put your implementation plan within your README.md file. Your implementation plan should contain the following:

- The names of your team members

- A 1-2 sentence description of how your team plans to implement each of the following components of the particle filter localization, as well as a 1-2 sentence description of how you will test each component:

  - How you will initialize your particle cloud (initialize_particle_cloud)?

  - How you will update the position of the particles based on the movements of the robot (update_particles_with_motion_model)?

  - How you will compute the importance weights of each particle after receiving the robot's laser scan data (update_particle_weights_with_measurement_model)?

  - How you will normalize the particles' importance weights (normalize_particles) and resample the particles (resample_particles)?

  - How you will update the estimated pose of the robot (update_estimated_robot_pose)?

  - How you will incorporate noise into your particle filter localization?

- A brief timeline sketching out when you would like to have accomplished each of the components listed above.

Writeup

Like last project, please modify the README.md file as your writeup for this project. Please add pictures, Youtube videos, and/or embedded animated gifs to showcase and describe your work. Your writeup should include the following:

- Objectives description (2-3 sentences): Describe the goal of this project.

- High-level description (1 paragraph): At a high-level, describe how you solved the problem of robot localization. What are the main components of your approach?

- For each of the main steps of the particle filter localization, please provide the following:

  - Code location (1-3 sentences): Please describe where in your code you implemented this step of the particle filter localization.

  - Functions/code description (1-3 sentences per function / portion of code): Describe the structure of your code. For the functions you wrote, describe what each of them does and how they contribute to this step of the particle filter localization.

  The main steps are:

  - Initialization of particle cloud,

  - Movement model,

  - Measurement model,

  - Resampling,

  - Incorporation of noise,

  - Updating estimated robot pose, and

  - Optimization of parameters.

- Challenges (1 paragraph): Describe the challenges you faced and how you overcame them.

- Future work (1 paragraph): If you had more time, how would you improve your particle filter localization?

- Takeaways (at least 2 bullet points with 2-3 sentences per bullet point): What are your key takeaways from this project that would help you/others in future robot programming assignments working in pairs? For each takeaway, provide a few sentences of elaboration.

Code

Our starter git repo / ROS package contains the following files:

particle_filter_project/launch/navigate_to_goal.launch

particle_filter_project/launch/visualize_particles.launch

particle_filter_project/map/house_map.pgm

particle_filter_project/map/house_map.yaml

particle_filter_project/rviz/particle_filter_project.rviz

particle_filter_project/scripts/particle_filter.py

particle_filter_project/CMakeLists.txt

particle_filter_project/package.xml

particle_filter_project/README.md

You will write your code within the particle_filter.py file we've provided. You may also create other python scripts that may provide helper functions for the main steps of the particle filter localization contained within particle_filter.py.

Please remember that when grading your code, we'll be looking for:

- Object-oriented programs

- Readable variable names

- At minimum, light commenting of your code (e.g., one comment per function, one comment per conditional, one comment per loop, ~ 1 comment per 10 lines of code or so)

gif

At the beginning or end of your writeup please include a gif of one of your most successful particle filter localization runs. In your gif, (at minimum) please show what's happening in your rviz window. We should see the particles in your particle filter localization (visualized in rviz) converging on the actual location of the robot.

Note that, unfortunately, rviz has no built-in recording option. If you are running on Windows/MacOS with NoMachine, we recommend recording the NoMachine rviz window directly from your base OS, since CSIL machines don't come with screen recorders.

If you are on MacOS, QuickTime Player is a great out-of-the-box option for screen recording. On Windows, OBS Studio and the Windows 10 built-in Game Bar both work well. On Ubuntu, one option is SimpleScreenRecorder.

rosbag

Record a run of your particle filter localization in a rosbag. Please record the following topics: /map, /scan, /cmd_vel, /particle_cloud, /estimated_robot_pose, and any other topics you generate and use in your particle filter localization project. Please do not record all of the topics, since the camera topics make the rosbags very large. For ease of use, here's how to record a rosbag:

$ rosbag record -O filename.bag topic-names

Partner Contributions Survey

The final deliverable is ensuring that each team member completes this Partner Contributions Google Survey. The purpose of this survey is to accurately capture the contributions of each partner to your combined particle filter localization project deliverables.

Grading

The Particle Filter Project will be graded as follows:

- 5% Implementation Plan

- 23% Writeup

  - 5% Objectives, High-level description, & gif

  - 12% Main steps

  - 6% Challenges, Future Work, Takeaways

- 2% ROS Bag Recordings

- 10% Individual Contributions

- 60% Code

  - 48% Implementation of Main Components

  - 8% Code Comments & Readability

  - 4% Parameter Optimization/Experimentation

Deadlines & Submission

- Wednesday, Apr 14 11:00am CST - Implementation Plan

- Monday, Apr 26 11:00am CST - Code, Writeup, gif, rosbag, Partner Contributions Survey

Submission

As was true with the warmup project, we will consider your latest commit before 11:00 AM CST as your submission for each deadline. Do not forget to push your changes to your forked github repos. If you want to use any of your flex late hours for this assignment, please email us and let us know (so we know to clone your code at the appropriate commit for grading).

The Particle Filter Localization

The goal of our particle filter localization (i.e., Monte Carlo localization) will be to help a robot answer the question "Where am I?" This problem assumes that the robot has a map of its environment; however, the robot either does not know or is unsure of its position and orientation within that environment.

The way that a robot determines its location using a particle filter localization is similar to how people used to find their way around unfamiliar places using physical maps (yes, before Google Maps, before cell phones... aka the dark ages). In order to determine your location on a map, you would look around for landmarks or street signs, and then try to find places on the map that matched what you were seeing in real life.

The particle filter localization makes many guesses (particles) for where it might think the robot could be, all over the map. Then, it compares what it's seeing (using its sensors) with what each guess (each particle) would see. Guesses that see similar things to the robot are more likely to be the true position of the robot. As the robot moves around in its environment, it should become clearer and clearer which guesses are the ones that are most likely to correspond to the actual robot's position.
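One way to picture "guesses that see similar things" is to score each guess by how closely its expected range readings match the robot's actual readings. The sketch below is only an illustration under stated assumptions: the function name `scan_similarity`, the Gaussian scoring, and the `sigma` value are hypothetical choices and are not part of the starter code.

```python
import math

def scan_similarity(robot_ranges, particle_ranges, sigma=0.1):
    """Score in [0, 1]; larger means the two sets of range readings agree more closely.

    Assumption: each range error is scored with an unnormalized zero-mean
    Gaussian, and the per-beam scores are multiplied together.
    """
    weight = 1.0
    for measured, expected in zip(robot_ranges, particle_ranges):
        error = measured - expected
        weight *= math.exp(-(error ** 2) / (2 * sigma ** 2))
    return weight

# Made-up readings (in meters) for three laser beams.
robot_scan = [1.0, 2.0, 0.5]
close_guess = [1.05, 1.95, 0.5]   # a particle near the true pose
far_guess = [3.0, 0.4, 2.0]       # a particle somewhere else in the map

print(scan_similarity(robot_scan, close_guess) > scan_similarity(robot_scan, far_guess))
```

The guess whose expected readings sit close to the real scan receives a much larger score, which is what lets the filter favor it later on.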

In more detail, the particle filter localization first initializes a set of particles in random locations and orientations within the map and then iterates over the following steps until the particles have converged to (hopefully) the position of the robot:

- Capture the movement of the robot from the robot's odometry

- Update the position and orientation of each of the particles based on the robot's movement

- Compare the laser scan of the robot with the hypothetical laser scan of each particle, assigning each particle a weight that corresponds to how similar the particle's hypothetical laser scan is to the robot's laser scan

- Resample with replacement a new set of particles probabilistically according to the particle weights

- Update your estimate of the robot's location
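The weighting and resampling steps above can be sketched with the Python standard library alone. This is a minimal illustration, not the starter code's implementation: the helper names `normalize_weights` and `resample` and the uniform fallback for all-zero weights are assumptions made for the example.

```python
import random

def normalize_weights(weights):
    """Scale the weights so they sum to 1."""
    total = sum(weights)
    if total == 0:
        # Assumption: if every weight is zero, fall back to a uniform distribution.
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]

def resample(particles, weights, rng=random):
    """Draw len(particles) particles with replacement, in proportion to their weights."""
    return rng.choices(particles, weights=weights, k=len(particles))

# Toy particles represented as (x, y, yaw) tuples.
particles = [(0.0, 0.0, 0.0), (1.0, 0.5, 1.57), (2.0, 2.0, 3.14)]
weights = normalize_weights([1.0, 1.0, 2.0])
new_particles = resample(particles, weights, random.Random(0))
print(weights)             # [0.25, 0.25, 0.5]
print(len(new_particles))  # 3
```

Because sampling is done with replacement, a high-weight particle can appear several times in the new set. If your particles are mutable objects rather than tuples, you would likely want to copy each drawn particle so that later updates do not affect its duplicates.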

Running the Code

When testing and running your particle filter code, you'll have the following commands running in different terminals or terminal tabs.

First terminal: run roscore.

$ roscore

Second terminal: run your Gazebo simulator. For this project, we're using the Turtlebot3 house environment.

$ roslaunch turtlebot3_gazebo turtlebot3_house.launch

Third terminal: launch the launchfile that we've constructed that 1) starts up the map server, 2) sets up some important coordinate transformations, and 3) runs rviz with some helpful configurations already in place for you (visualizing the map, particle cloud, robot location). If the map doesn't show up when you run this command, we recommend shutting down all of your terminals (including roscore) and starting them all up again in the order we present here.

$ roslaunch particle_filter_project visualize_particles.launch

Fourth terminal: run the Turtlebot3 provided code to teleoperate the robot.

$ roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

Fifth terminal: run your particle filter code.

$ rosrun particle_filter_project particle_filter.py

Working with the Map

A large component of the third step of the particle filter (comparing laser scans; see "The Particle Filter Localization" section above) is determining the hypothetical laser scan of each particle so that it can be compared to the actual robot's laser scan. In order to calculate properties of each particle's hypothetical laser scan, you'll need to work with the particle filter's map attribute, which is of type nav_msgs/OccupancyGrid. The map data list (data) uses row-major ordering, and the map info contains useful information about the width, height, resolution, and more. The origin of the map is in the bottom left corner in the visualization of the map below.
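Row-major ordering means that the cell at a given row and column sits at index row * width + column in the data list. The sketch below shows one way to turn a world coordinate into that index. The function name `world_to_grid_index` is hypothetical, and the example assumes the map origin has no rotation. It takes plain numbers so that it runs without ROS; with a real nav_msgs/OccupancyGrid message you would read these values from `info.origin.position`, `info.resolution`, `info.width`, and `info.height`.

```python
import math

def world_to_grid_index(x, y, origin_x, origin_y, resolution, width, height):
    """Return the row-major index of the map cell containing (x, y), or None if off the map.

    Assumption: the map origin is not rotated relative to the world frame.
    """
    col = math.floor((x - origin_x) / resolution)
    row = math.floor((y - origin_y) / resolution)
    if not (0 <= col < width and 0 <= row < height):
        return None
    return row * width + col

# Toy map: 4 cells wide, 6 cells tall, 0.5 m per cell, origin at (-1.0, -2.0).
index = world_to_grid_index(0.3, -0.2, -1.0, -2.0, 0.5, 4, 6)
print(index)  # 14 (row 3, column 2)
```

The value stored at that index is the occupancy of the cell, where -1 marks unknown space and 0 to 100 gives the occupancy probability.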

A View of the Goal

The following progression of screenshots is from my implementation of the particle filter for this project. You can see that at the beginning, the particles are randomly distributed throughout the map. Over time, the particles converge on likely locations of the robot, based on its sensor measurements. And eventually, the particles converge on the true location of the robot.

Here are a few gifs that better portray the progression of the particle clouds.

Getting Out of the House: Navigation to a Goal Location

Once you have successfully localized your robot, you can also give your robot a goal location and have your robot move there (with the help of some Turtlebot3 navigation libraries). Your goal is to get out of the house (navigate out the front door) once your robot has localized itself. Here's how to do it:

- Implement the particle filter (as described above) to localize your robot

-

In your teleoperation terminal where you ran roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch, hit the

skey to stop your robot. -

Ctrl+C both:

- the teleoperation terminal where you ran roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

- the particle filter terminal where you ran rosrun particle_filter_project particle_filter.py

-

In a new terminal, run:

$ roslaunch particle_filter_project navigate_to_goal.launch -

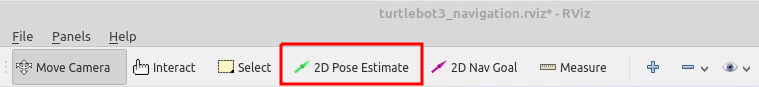

In your RViz window, click the

2D Pose Estimatebutton and match the 2D pose estimate with your estimated robot pose (/estimated_robot_pose). (Once you click the2D Pose Estimatebutton, click the location of your estimated robot pose and drag the mouse to set the orientation.)

-

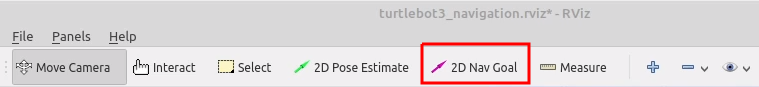

Next, in your RViz window, click the

2D Nav Goalbutton and place the 2D Nav Goal arrow outside the front door of the house (next to the mailbox). As soon as you do this, the robot should start moving to the location you set and should successfully get out of the house!

At this point your robot should have successfully navigated outside the house! If you would like, you can include a gif recording of your robot getting out of the house in your writeup. However, this is not required and your grade will be the same either way. Through this navigation portion, we simply want to expose you to the built-in navigation capabilities that the Turtlebot3 ROS libraries provide.

Helpful Tips

- If your map isn't coming up in rviz, shut down all of your terminals, and re-run them in the order listed in "Running the Code".

- Start out using a small number of particles in your particle cloud, so you can make sure they're acting as you expect before scaling up the number of particles.

- Depending on the efficiency of your implementation, you may get exceptions regarding Gazebo's time going backwards. In such cases, decrease the number of particles and try running your localization again.

- It is possible that your localization initially converges to multiple clouds. However, after a longer period of time, your particles should converge to one cloud.

- Both the sensors and motors generate noise within the Gazebo world. We suggest that you counteract Gazebo noise in your particle cloud update step by introducing artificial noise to the particles at each iteration. An easy way to generate artificial noise is to sample it from a normal distribution (Gaussian noise).

- Test your code early and often. It's much easier to incrementally debug your code than to write up the whole thing and then start debugging.
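The Gaussian noise tip above can be sketched as follows. This is a minimal illustration with assumed names and values: `add_gaussian_noise` and the default standard deviations are hypothetical, and you would tune the amounts for your own filter.

```python
import math
import random

def add_gaussian_noise(x, y, yaw, position_sigma=0.05, yaw_sigma=0.05, rng=random):
    """Return the pose with zero-mean Gaussian noise added to each component."""
    noisy_x = x + rng.gauss(0.0, position_sigma)
    noisy_y = y + rng.gauss(0.0, position_sigma)
    noisy_yaw = yaw + rng.gauss(0.0, yaw_sigma)
    # Wrap the angle back into [-pi, pi] so it stays a valid heading.
    noisy_yaw = math.atan2(math.sin(noisy_yaw), math.cos(noisy_yaw))
    return noisy_x, noisy_y, noisy_yaw

# Perturb one toy particle pose (meters, meters, radians).
print(add_gaussian_noise(1.0, 2.0, 0.5, rng=random.Random(1)))
```

Applying a perturbation like this to every particle after the motion update keeps the cloud from collapsing onto a handful of identical poses.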

A Personal Note

In many ways, I would not be a professor at UChicago and teaching this class had it not been for the particle filter that I programmed during my undergrad at Franklin W. Olin College of Engineering. My partner in crime, Keely, and I took a semester to learn about algorithms used in self-driving cars (under the guidance of Professor Lynn Stein) and implemented our own particle filter on a real robot within a maze that we constructed ourselves. It was this project that propelled me to get my PhD in Computer Science studying human-robot interaction, which then led me to UChicago. Below, I've included some photos of our project.

And here's the video of our particle filter working, where you can see the particles (blue) converging to the location of the robot (red).

Acknowledgments

The design of this course project was influenced by the particle filter project that Keely Haverstock and I completed in Fall 2013 at Olin College of Engineering as well as Paul Ruvolo and his Fall 2020 A Computational Introduction to Robotics course taught at Olin College of Engineering.