Class Meeting 10: Robot Vision

Today's Class Meeting

- Learning more about computer vision (CV) with an eye towards robotics applications - here's a link to the slides

- Implementing a vision algorithm that can detect images of cats (using our Turtlebot3 camera in Gazebo)

What You'll Need for Today's Class

- Your Ubuntu 20.04 programming environment

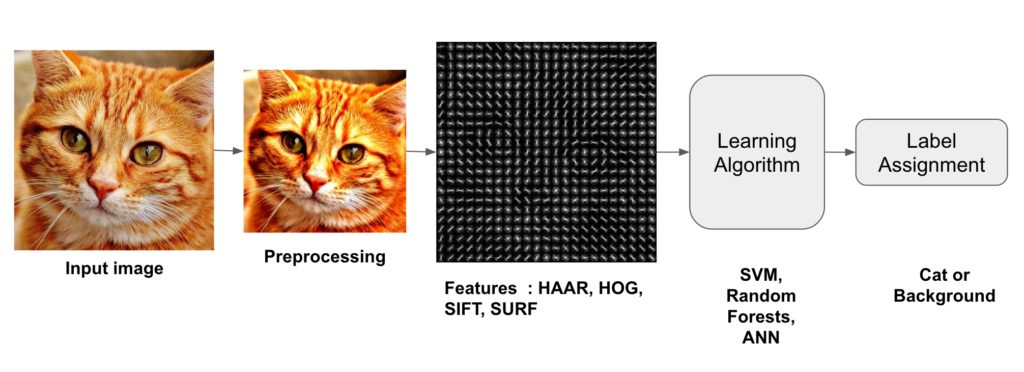

Image Classification Pipeline

Image source: https://learnopencv.com/wp-content/uploads/2016/11/image-classification-pipeline-1024x372.jpg

Class Exercise: Cat Recognition by our Turtlebot3

For today's exercise, please get into groups of 2-3. We encourage you to work individually on your own computers and communicate with your group members as you work on the exercise.

You goal in this exercise is to navigate your Turtlebot3 robot so that it moves towards the cat and stops when it is 0.2 meters in front of the cat. You will use information from the /scan and camera/rgb/image_raw ROS topics to guide your Turlebot3's movement via the /cmd_vel ROS topic.

Getting Started

To get started on this exercise, update the intro_robo class package to get the class_meeting_10_cat_recognition ROS package and starter code that we'll be using for this activity.

$ cd ~/catkin_ws/src/intro_robo

$ git pull

$ git submodule update --init --recursive

$ cd ~/catkin_ws && catkin_make

$ source devel/setup.bash Running the Code

Terminal 1: roscore

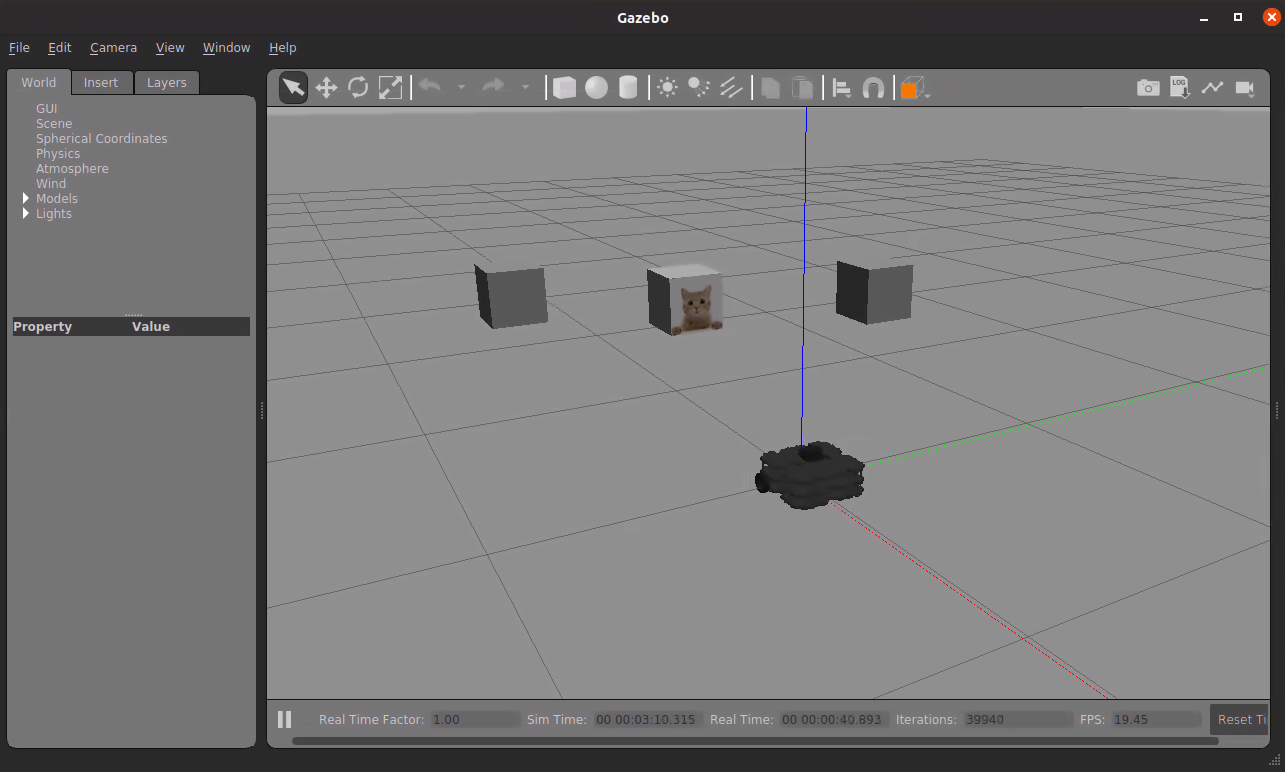

$ roscoreTerminal 2: launch the Gazebo world with the cat and the Turtlebot3

$ roslaunch class_meeting_10_cat_recognition turtlebot3_and_cat_cube.launchTerminal 3: have your Turtlebot3 turn 180° so it's facing the cat (once the Turtlebot3 has turned around, you can ctrl+C this terminal)

$ rostopic pub /gazebo/set_model_state gazebo_msgs/ModelState '{model_name: turtlebot3_waffle_pi, pose: { position: { x: 0.0, y: 0, z: 0.0 }, orientation: {x: 0, y: 0.0, z: 1.0, w: 0.0 } }, twist: { linear: { x: 0, y: 0, z: 0 }, angular: { x: 0, y: 0, z: 0} }, reference_frame: world }'Terminal 3 (after turning your robot around): run the cat recognizer ROS node starter code

$ rosrun class_meeting_10_cat_recognition cat_recognizer.py

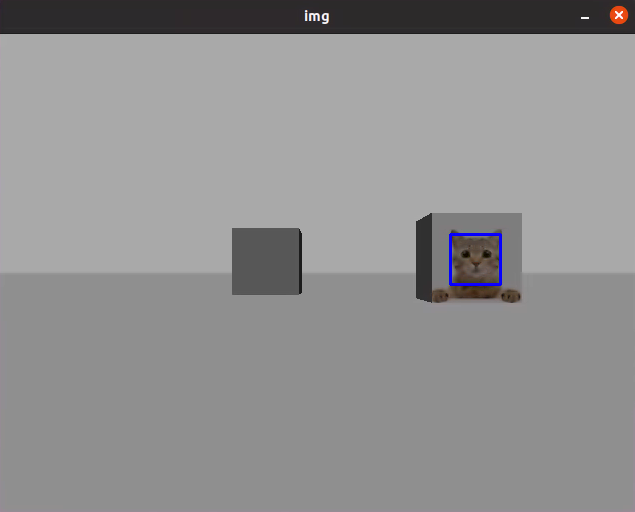

When you run cat_recognizer.py, you should see the following popup, showing that the cat is recognized in the first image that the robot receives.

Cat Recognition

The following snippets from the starter code contain the majority of the cat recognition 'magic'. This piece of code uses a pre-trained Haar feature-based cascade classifier of cat face images, which is contained in catface_detector.xml. Please refer to this OpenCV documentation page on Face Detection using Haar Cascades if you're curious to learn more.

# load the opencv2 XML classifier for cat faces

self.catface_cascade = cv2.CascadeClassifier('catface_detector.xml') ...

img = self.bridge.imgmsg_to_cv2(data, desired_encoding='bgr8')

# turn the image into a grayscale

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# using the XML classifier, we now detect cat faces in the image

cat_faces = self.catface_cascade.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5)

for (x,y,w,h) in cat_faces:

img = cv2.rectangle(img,(x,y),(x+w,y+h),(255,0,0),2)

# visualize the cat face location in the image

cv2.imshow('img',img)

cv2.waitKey(0)

cv2.destroyAllWindows()Your Goal Revisited

Armed with this information about where the cat's face is located, it's now your goal to move your robot so that it's 0.2 meters in front of the cat. You're encouraged to use both the robot's RGB camera and LiDAR to inform the robot's movement.

Acknowledgments

The content for today's class is inspired by posts on learnopencv.com and from the A Computational Introduction to Robotics course at Olin College of Engineering. Today's class exercise used code from the OpenCV documentation on Face Detection using Haar Cascades.