Lab F: Train an Image Classifier and Program Turtlebot3 to React to Different Images

Today's Lab

- Learning more about how to train an image classifier

- Knowing how to leverage outputs from neural networks to control robots

- Gaining experience with pair programming

Lab Exercise: Training an Image Classifier to allow a Turtlebot3 to React to Different Images

You will complete this exercise in pairs (ideally with your Q-learning project partner).

You will be programming a Turtlebot3 to respond to different images it "sees". The robot's behavior will change based on whether it recognizes a cat, a dog, or neither in its camera. You will need to pass the robot's camera footage to a trained neural network and use the output to command the robot to perform different behaviors described in the following narrative.

Getting Started

To get started on this exercise, update the intro_robo class package to get the lab_f_image_classifier ROS package and starter code that we'll be using for this activity.

$ cd ~/catkin_ws/src/intro_robo

$ git pull

$ git submodule update --init --recursive

$ cd ~/catkin_ws && catkin_make

$ source devel/setup.bash

Additionally, you'll need to make sure you have PyTorch installed (e.g., by executing pip3 show torch). If you don't have PyTorch installed, run the following command to install it:

$ pip3 install torch torchvision torchaudio

Part 1: Train an Image Classifier

In order for the robot to respond to the different images it sees (e.g., a cat, a dog, or something else), we'll first need to train an image classifier that can distinguish these classes. We'll do this work in the Jupyter Notebook cifar10_tutorial.ipynb.

You can open the Jupiter Notebook by running:

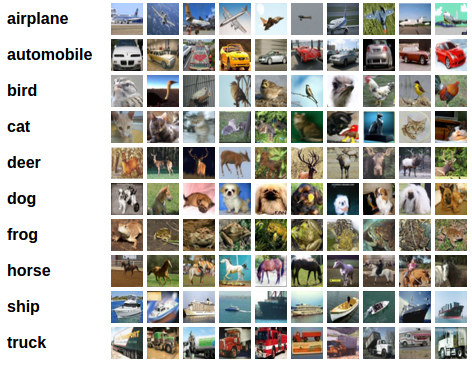

$ jupyter notebook cifar10_tutorial.ipynbNext, follow the instructions in the Jupiter Notebook to train an image classifier. Your classifier will be able to distinguish several different types of objects within an image, see below.
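For each image, a classifier of this kind produces one score per class, and the predicted class is the one with the highest score. The sketch below shows one way to reduce those scores to the three cases this lab cares about. It assumes the notebook uses the standard CIFAR-10 label order; the function name label_from_scores and the plain-list input are hypothetical, chosen for illustration, and are not part of the starter code.

```python
# Standard CIFAR-10 label order (assumed to match the notebook's class list).
CIFAR10_CLASSES = ("plane", "car", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck")


def label_from_scores(scores):
    """Return 'cat', 'dog', or 'neither' given one score per CIFAR-10 class.

    Hypothetical helper: `scores` is a plain list of 10 numbers, e.g. the
    network's output for a single image converted with .tolist().
    """
    if len(scores) != len(CIFAR10_CLASSES):
        raise ValueError("expected one score per CIFAR-10 class")
    # The predicted class is the index of the largest score (argmax).
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    best_class = CIFAR10_CLASSES[best_index]
    # Every class other than cat or dog is treated as "neither".
    return best_class if best_class in ("cat", "dog") else "neither"
```

Collapsing the eight other classes into "neither" keeps the robot-side logic down to three cases.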

Part 2: Program Turtlebot3 to React to Different Images

Now that you've trained a neural network to identify different objects within an image, we'll use that trained network to allow our Turtlebot3 to respond uniquely to seeing a cat, a dog, or neither from its RGB camera. Since there aren't any real cats or dogs in JCL, we recommend that you bring up images of cats, dogs, and/or other items on your phone or computer and place the device so that the robot can see it.

Robot Narrative: Imagine you are programming a curious robot named Scout. Scout is equipped with a camera that allows it to observe its surroundings in the real world. Scout is designed to be adaptive and responsive, capable of reacting to visual cues to navigate its environment safely. One day, Scout is exploring a park when its camera captures various images. Each time Scout's camera processes an image, it analyzes it to determine whether it depicts a cat, a dog, or something else entirely.

- Encountering a Cat

  - Scout's sensors detect an image of a cat. Instantly, Scout feels a surge of caution. To express its fear, Scout decides to move backward slowly, trying to create distance from the perceived threat.

- Spotting a Dog

  - Another image appears, this time showing a dog. Scout recognizes it's a different animal but isn't sure whether to be excited or wary. To show its excitement, Scout decides to spin in a joyful circle.

- Seeing Something Else

  - Sometimes, Scout's camera captures images that don't resemble cats or dogs. When this happens, Scout simply pauses, observing its surroundings but choosing not to move until the next image is processed.
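The three behaviors in the narrative can be sketched as a lookup from the recognized label to a velocity command. This is only an illustration under stated assumptions: the Velocity type, the velocity_for function, and the specific speeds are hypothetical and not taken from the starter code. On the robot, the two numbers would typically fill the linear.x and angular.z fields of a geometry_msgs/Twist message.

```python
from typing import NamedTuple


class Velocity(NamedTuple):
    """Hypothetical container for the two values a ground robot needs."""
    linear_x: float   # m/s; negative values drive backward
    angular_z: float  # rad/s; nonzero values turn the robot in place


# Illustrative speeds only (assumptions); tune them on the real robot.
BEHAVIORS = {
    "cat": Velocity(linear_x=-0.05, angular_z=0.0),    # back away slowly
    "dog": Velocity(linear_x=0.0, angular_z=0.5),      # spin in a circle
    "neither": Velocity(linear_x=0.0, angular_z=0.0),  # pause in place
}


def velocity_for(label):
    """Return the velocity for a label, pausing on anything unrecognized."""
    return BEHAVIORS.get(label, BEHAVIORS["neither"])
```

Defaulting to the paused state means an unexpected label leaves the robot stationary rather than moving.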

Programming the Robot Scout

Now, let's get to programming. Open up robot_scout.py to see your TODOs for implementing your robot Scout.

Running your Code

Launch Bringup on the Turtlebot. In one terminal, run:

$ roscore

In a second terminal, run:

$ ssh pi@IP_OF_TURTLEBOT

$ set_ip LAST_THREE_DIGITS

$ bringup

In a third terminal, run the following commands to start receiving ROS messages from the Raspberry Pi camera:

$ ssh pi@IP_OF_TURTLEBOT

$ set_ip LAST_THREE_DIGITS

$ bringup_cam

bringup_cam is an alias for the command roslaunch turtlebot3_bringup turtlebot3_rpicamera.launch. Note that running bringup_cam does not open the camera feed window. You must run code (such as robot_scout.py, launched below) to see the camera feed.

In a fourth terminal, run the following command to decompress the camera messages:

$ rosrun image_transport republish compressed in:=raspicam_node/image raw out:=camera/rgb/image_raw

Finally, in a fifth terminal, run the ROS node for Robot Scout:

$ rosrun lab_f_image_classifier robot_scout.py

The starter code implements a helpful debugging window to help visualize the camera view.

- If your camera view shows a black image when running the starter code (which we've observed when using the remote CSIL machines), please follow these instructions to enable cv2.imshow() to run on the main thread.

- If you're experiencing OpenCV issues, try commenting out line 15: cv2.namedWindow("window", 1)

Tips:

robot_scout.py imports a class called Net from image_classifier.py. See how the neural network is defined there.

Acknowledgments

The code in this lab was created by Ting-Han (Timmy) Lin and Tewodros (Teddy) Ayalew. Part of the code is taken and modified from Training a Classifier.